Summarize anything with the Universal Summarizer

Universal Summarizer is an AI-powered tool for instantly summarizing just about any content of any type and any length, by simply providing a URL address (and soon by uploading a file).

One of the most applauded aspects of the Universal Summarizer is its efficiency. Users have commented on how the tool enables them to quickly understand complex documents without spending hours reading through them. This has been particularly beneficial for professionals needing to stay updated on the latest industry news and trends.

Currently supported document types are websites and web pages, PDFs, YouTube videos, and podcasts/audio files (mp3/wav).

Universal Summarizer is available as a web app for all Kagi Search users (you can create a free trial account to try it) or as an API. You can also use it through the Kagi Search extension or through the Zapier integration in your programmatic workflows.

Note: We’ll probably have hiccups for a day or two post-launch as there is a lot of new infrastructure to support all of this. Let us know if you encounter any difficulties by emailing support@kagi.com.

Table of Contents

- What is new?

- Supported input content types

- Try it

- API

- Pricing

- Integrations

- Summarization bang

- Zapier integration

- Kagi Search extension (coming soon)

- Roadmap

- Frequently Asked Questions

What is new?

We have been working hard to improve Universal Summarizer since we launched the technology preview almost two months ago.

The main improvements are:

- Improved summarization engine: better, more informative and faster summaries

- Much more robust content extraction (we significantly reduced failure rates)

- Support for real-time audio file summarization (mp3/wav)

- Support for HN thread summarization (by popular demand)

- Option to specify output language (independent of the source language)

- Two summarization engines to choose from + enterprise-grade summarization engine

Supported input content types

Universal Summarizer is able to summarize numerous types of content.

Website or a web page, including articles and blog posts. Example.

PDF documents. Example.

PowerPoint (.pptx) documents. Example.

Audio/podcasts. Supported formats are mp3 and wav. One hour of audio is transcribed in about 10 seconds. Example.

YouTube videos. We are currently using available video transcripts. In the future we will be summarizing videos directly. Example.

Special content types include:

Try it

You can find the Universal Summarizer web app at kagi.com/summarizer. This will require a Kagi account, which you can create for free here.

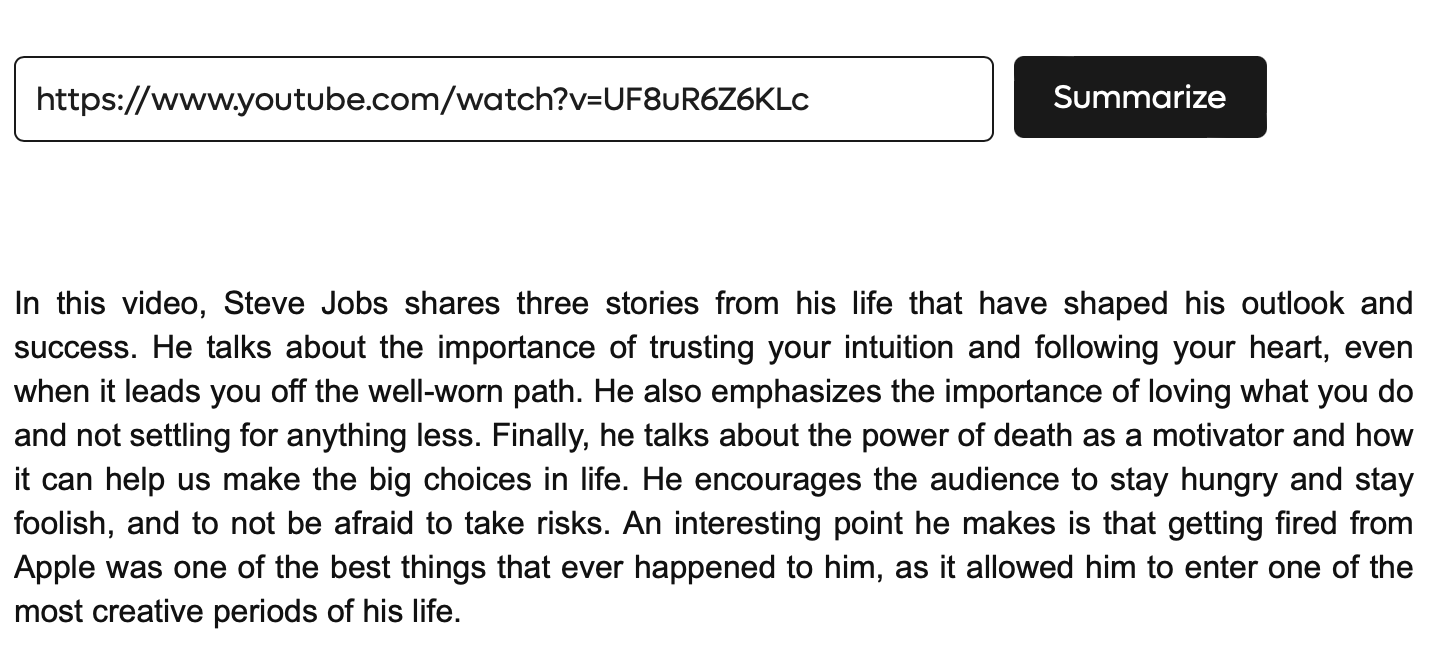

Paste in the URL to the document and click Summarize! It is that simple.

Everything else is done transparently in the background without any user input. You can even specify the language you want to use for the output (this is independent of the language the document is in). We currently support 30 output languages for the summaries.

You can also specify one of two summarization engines available:

- Agnes (default): Formal, technical, analytical summary

- Daphne: Informal, creative, friendly summary

API

Universal Summarizer has a programmatic API available.

Get an API key here (requires a Kagi Search account), or view API documentation.
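As a rough illustration of what a call might look like, here is a minimal Python sketch that builds (but does not send) a summarization request. The endpoint path, the `Bot` authorization scheme, and the `url`, `engine`, and `target_language` parameter names are assumptions that are not stated in this post; check the API documentation for the authoritative details.

```python
import json
import urllib.request

# Assumed endpoint, not stated in this post; verify against the API documentation.
API_URL = "https://kagi.com/api/v0/summarize"


def build_summarize_request(api_key, url, engine="agnes", target_language=None):
    """Build (but do not send) a POST request asking for a summary of `url`."""
    payload = {"url": url, "engine": engine}  # assumed parameter names
    if target_language is not None:
        payload["target_language"] = target_language  # assumed parameter name
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bot {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_summarize_request("YOUR_API_KEY", "https://example.com/article")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

To actually send the request, pass `req` to `urllib.request.urlopen` and parse the JSON response.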

Pricing

For API usage, the price is $0.030 per 1,000 tokens processed or $0.025 per 1,000 tokens processed if you are on the Kagi Ultimate plan.

The billing is capped at 10,000 tokens regardless of the length of the document. This means that the maximum cost to summarize a document (of any length) is $0.30.
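The billing rule above can be sketched in a few lines of Python. The prices and the 10,000-token cap come from this post; the function and constant names are only illustrative.

```python
from decimal import Decimal

PRICE_PER_1K = Decimal("0.030")           # standard API price per 1,000 tokens
PRICE_PER_1K_ULTIMATE = Decimal("0.025")  # price on the Kagi Ultimate plan
BILLABLE_TOKEN_CAP = 10_000               # tokens beyond this are not billed


def summary_cost(tokens, ultimate=False):
    """Return the API cost in dollars for summarizing a document of `tokens` tokens."""
    billable = min(tokens, BILLABLE_TOKEN_CAP)
    rate = PRICE_PER_1K_ULTIMATE if ultimate else PRICE_PER_1K
    return rate * billable / 1000


for tokens in (2_000, 10_000, 200_000):
    print(tokens, summary_cost(tokens), summary_cost(tokens, ultimate=True))
```

A 200,000-token document is billed the same as a 10,000-token one, which is where the $0.30 ceiling comes from.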

When using the web app, summaries are charged as a part of your Kagi subscription according to AI usage pricing.

Universal Summarizer can also be accessed through Kagi Search as the Summarize page feature in search results.

We are also announcing Muriel, our enterprise-grade summarization engine available for businesses. Muriel features unprecedented quality summaries and a fixed cost of summarization of $1/summary, regardless of the document length. Muriel is ideal for newsrooms, legal teams, research, and other activities where fast access to high-quality summaries is imperative. Contact Vladimir Prelovac at vlad@kagi.com for a demonstration and more information.

Integrations

Summarization bang

If you use Kagi Search, you can now type “!sum [url]” to directly open Universal Summarizer for that URL. In addition, you can use !sumt to request “key moments”.

This is a convenient way to summarize any web document directly from your browser’s address bar.
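If you want to trigger the bang from a script rather than typing it, a small helper can build the corresponding search URL. This is only a sketch: it assumes the standard `https://kagi.com/search?q=` query URL (not stated in this post), and the helper name is hypothetical.

```python
from urllib.parse import quote_plus

# Assumed Kagi search URL prefix; not stated in this post.
KAGI_SEARCH = "https://kagi.com/search?q="


def summarizer_bang_url(document_url, key_moments=False):
    """Build a search URL that runs !sum (or !sumt for key moments) on a document."""
    bang = "!sumt" if key_moments else "!sum"
    return KAGI_SEARCH + quote_plus(f"{bang} {document_url}")


print(summarizer_bang_url("https://example.com/a"))
print(summarizer_bang_url("https://example.com/a", key_moments=True))
```

Opening the resulting URL in a browser where you are logged in to Kagi should behave like typing the bang into the address bar.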

Zapier integration

Universal Summarizer is available as a Zapier integration allowing you to quickly integrate summarization into your programmatic workflows.

Summarize an article before posting to social media? We've got you covered.

Check out the Universal Summarizer Zapier integration.

Kagi Search extension (coming soon)

Kagi Search extensions for Chrome and Firefox will soon support requesting a document summary straight from the web page. You need to be logged in to your Kagi account to use it.

In addition, these extensions are open-source, so feel free to improve the integration and submit a pull request.

Roadmap

- Add support for uploading files or pasting text

- Specify summary focus (“focus on xyz”)

- Interactive chat mode with the document, à la the Ask Questions about Document feature in Kagi Search

- Support for more input file types and specialized importers (images, spreadsheets, …)

Frequently Asked Questions

Q. Why use Universal Summarizer over an LLM?

A. Comparing Universal Summarizer with a typical LLM is only partially appropriate, as these tools serve different purposes. LLMs are good at a lot of things, whereas Universal Summarizer tries to do only one thing and be the best possible at it.

For example, while an LLM can summarize text, it lacks the functionality to process information from various sources, such as content directly from URLs. In other words, there is additional work involved in getting the content into a form that an LLM can process (cleaned text).

The Universal Summarizer, on the other hand, offers a comprehensive infrastructure that enables it to automatically extract, parse, clean and process content from web pages, PDFs, and even YouTube videos. It can even transcribe and summarize audio files in real-time, providing users with a seamless and convenient experience.

Furthermore, the Universal Summarizer’s ability to handle unlimited tokens sets it apart from most LLMs currently available. The Universal Summarizer offers a more versatile and robust solution for summarizing and processing information across multiple formats and sources.

Q. How does Universal Summarizer compare to GPT-4?

A. While GPT-4 can summarize a larger number of tokens than GPT-3.x (up to 32,000 tokens), the Universal Summarizer surpasses this limitation and can summarize documents containing 50,000 or even 200,000 tokens.

The Universal Summarizer is also the faster and more affordable option. Summarizing 32,000 tokens using GPT-4 would cost $1.92 (32 x $0.06 per 1,000 tokens), whereas the same task would cost only $0.30 (10 x $0.03 per 1,000 tokens) with the Universal Summarizer. This is due to the 10,000 billable token limit, which ensures that every summary costs at most $0.30.
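The arithmetic behind this comparison can be checked with a short Python sketch. The per-1,000-token prices and the 10,000-token billing cap are the figures quoted in this answer; the function names are only illustrative.

```python
from decimal import Decimal

GPT4_PER_1K = Decimal("0.06")        # GPT-4 price per 1,000 tokens, as quoted above
SUMMARIZER_PER_1K = Decimal("0.03")  # Universal Summarizer API price per 1,000 tokens
SUMMARIZER_CAP = 10_000              # billable token limit


def gpt4_cost(tokens):
    """Cost in dollars with no billing cap."""
    return GPT4_PER_1K * tokens / 1000


def summarizer_cost(tokens):
    """Cost in dollars with billing capped at SUMMARIZER_CAP tokens."""
    return SUMMARIZER_PER_1K * min(tokens, SUMMARIZER_CAP) / 1000


tokens = 32_000
print(f"GPT-4: ${gpt4_cost(tokens):.2f}")
print(f"Universal Summarizer: ${summarizer_cost(tokens):.2f}")
```

Below the cap the difference is just the per-token price; above it, the GPT-4 cost keeps growing with document length while the Universal Summarizer cost stays flat.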

The Universal Summarizer offers greater flexibility in handling token limits and provides a more cost-effective and efficient solution than GPT-4.

Q. Can you give an overview of how the Universal Summarizer works? How do you get around the context length limitation?

A. We use a completely different approach for summarization that does not have any token limit.

Q. Why is an account required to use Universal Summarizer?

A. The main reason is cost control. The last time we did this without accounts, the service got hammered by bots and that was an expensive lesson.

Q. What is the difference between the Agnes and Muriel (enterprise) models?

A. Here is a summary comparison between Agnes and Muriel on “Scaling Language Models: Methods, Analysis & Insights from Training Gopher” https://arxiv.org/pdf/2112.11446.pdf

Agnes: The PDF document titled “Scaling Language Models: Methods, Analysis & Insights from Training Gopher” presents an analysis of Transformer-based language model performance across a wide range of model scales, from models with tens of millions of parameters up to a 280 billion parameter model called Gopher. The models are evaluated on 152 diverse tasks, achieving state-of-the-art performance across the majority. The study finds that gains from scale are largest in areas such as reading comprehension, fact-checking, and the identification of toxic language, but logical and mathematical reasoning see less benefit. The document also provides a holistic analysis of the training dataset and models behavior, covering the intersection of model scale with bias and toxicity. Finally, the study discusses the application of language models to AI safety and the mitigation of downstream harms. The key implication of this study is that larger language models can significantly improve performance on a wide range of natural language processing tasks, but there are also concerns about the potential for bias and toxicity in these models.

Muriel: This PDF document presents an analysis of Transformer-based language model performance across various scales, focusing on a 280 billion parameter model called Gopher. The models are evaluated on 152 diverse tasks, achieving state-of-the-art performance in the majority of them. The most significant gains from scaling are observed in areas such as reading comprehension, fact-checking, and toxic language identification, while logical and mathematical reasoning see less benefit.

The paper also provides a holistic analysis of the training dataset and the model’s behavior, examining the intersection of model scale with bias and toxicity. It discusses the application of language models to AI safety and the mitigation of downstream harms.

Key findings include:

1. Gopher outperforms current state-of-the-art language models in approximately 81% of tasks with comparable results, particularly in knowledge-intensive domains such as fact-checking and general knowledge.

2. Larger models are more likely to generate toxic responses when provided with toxic prompts, but they can also more accurately classify toxicity.

3. Gains from scale are largest in areas like reading comprehension, fact-checking, and the identification of toxic language. However, logical and mathematical reasoning see less benefit.

The document also discusses challenges and future directions, such as:

1. Towards efficient architectures: The need for more efficient architectures to reduce the computational cost of training and inference.

2. Challenges in toxicity and bias: Addressing the issues of toxicity and bias in language models to ensure safe and fair AI systems.

3. Safety benefits and risks: Balancing the potential benefits of language models in AI safety with the risks associated with their misuse.

In conclusion, the paper highlights the improved performance of large-scale language models like Gopher in various tasks and emphasizes the importance of addressing challenges related to toxicity, bias, and AI safety.

Q. My company is interested in access to Muriel, how do we reach out?

A. Please contact Vladimir Prelovac at vlad@kagi.com