Kagi's approach to AI in search

Kagi Search is pleased to announce the introduction of three AI features into our product offering.

We’d like to discuss how we see AI’s role in search, the challenges involved, and our AI integration philosophy. Finally, we will go over the features we are launching today.

Table of Contents

- Kagi’s AI history

- Why AI matters in the context of search

- Kagi AI integration philosophy

- How good are AI “Answering Engines” really?

- What are we launching today?

- Summarize Results

- Summarize Page

- Ask Questions about Document

- AI Usage Pricing

- Universal Summarizer

- Frequently Asked Questions

Kagi’s AI History

Not a lot of people know that Kagi started as Kagi.ai in 2018 and has a long heritage of utilizing AI.

During those early days, we were passionate about AI’s potential, particularly in question answering and summarization. The Transformer model, which paved the way for modern LLMs (large language models), had only just been invented in 2017. However, those initial models were small and paled in comparison with the immense LLMs of today.

In those early days, it took a lot of creativity and innovation to extract the full potential of AI. Today, the power of LLMs such as GPT is so great that “the hottest new programming language is English,” as Andrej Karpathy put it. This has democratized the ability to “create magic,” allowing even small companies to compete with industry giants, as we demonstrate later in this text.

The early work we did in the field included the following:

- Working on state-of-the-art question-answering models in 2019 link.

- Developing an early prototype of Kagi search with question answering capabilities (2019) link.

- Video question answering capabilities (2019) link.

- Making significant progress in text understanding and summarization, leading to the development of the Universal Summarizer link.

- Contributing to the academic community by publishing research on using embeddings to represent the physical world, which could be a factor in advancing AGI link.

- Even a sci-fi story featuring AI as the main character link.

Following 2019, we placed most of these endeavors on hold to concentrate on developing the finest search product. Our early efforts, however, enabled us to use machine learning to deliver answers to questions directly within the search experience, making us the only search engine besides Google and Bing able to do so when we launched the public beta in 2022.

With the recent advancements in AI, our interest in exploring how it can improve search has reignited. Last year we published technology demonstrations for AI in search and AI in browser, and today we are unveiling integrations based on these demos directly in Kagi search.

Why AI matters in the context of search

Modern search engines can indeed satisfy the majority of existing searches and one could say that AI is simply not needed. However, it is important to note that the searches being made today only represent a small fraction of the total possible searches that can be made (probably less than 10%). The emergence of generative AI will enable a new paradigm in search that can unlock a whole new category of previously impossible searches.

Despite mainstream search engines having a 25-year lead with sophisticated technologies and vast resources, they still fail to provide a straightforward answer to a simple query like “do more people live in new york or mumbai”. This leaves users with a series of links to follow, making the search process tedious and inefficient. This arrangement intrinsically benefits the ad-supported business models of mainstream search engines by expanding the surface of ad-serving opportunities.

Generative AI, on the other hand, can automatically process and analyze information to answer questions like the one above, thus giving users exactly what they want.

Let’s take another example. A query like “give me the names of all palo alto based ceos that run companies in the search space with less than 50 employees” is not a thing today simply because current mainstream search engines cannot satisfy this directly. It would take a human with a search engine probably around 5-15 minutes to extract this information. This type of search represents a real need and can be fulfilled by search + generative AI in seconds in the future.

Finally, complex queries like “summarize the latest research breakthroughs on lung cancer” can be a daunting task for an average user, who might end up reading countless pages of documents. However, with the help of AI, which can run several searches and process dozens of research papers automatically, users could get a summary with citations in a matter of seconds.

These examples highlight the immense potential of AI in expanding the boundaries of what we perceive as possible searches. Of course, much will depend on the incentives in place with any such AI integration. If the purpose of generative AI is to “create new value for advertisers” this may not lead to desired outcomes and advance us forward. Aligning incentives is necessary for continued progress towards the user-centric future of search.

Kagi AI integration philosophy

Generative AI is a hot topic, but the technology still has flaws. Critics of AI go as far as to say that “[AI] will degrade our science and debase our ethics by incorporating into our technology a fundamentally flawed conception of language and knowledge”.

From an information retrieval point of view, relevant to our context of a search engine, we should acknowledge the two main limitations of the current generation of AI.

Large language models (LLMs) should not be blindly trusted to provide factual information accurately. They have a significant risk of generating incorrect information or fabricating details (confabulating). This can easily mislead people who are not approaching LLMs pragmatically. (This is a product of the auto-regressive nature of these models: the output is predicted one token at a time, and once it strays from the “correct” path, a risk that compounds with the length of the output, it is “doomed” until the end of the output, without the ability to plan ahead or correct itself.)

LLMs are not intelligent in the human sense. They have no understanding of the actual physical world. They do not have their own genuine opinions, emotions, or sense of self. We must avoid attributing human-like qualities to these systems or thinking of them as having human-level abilities. They are limited AI technologies. (In a way, they are similar to a wheel: it can get us from point A to point B, sometimes much more efficiently than the human body can, but it lacks the ability to plan and the agility that lets a human body go everywhere a human body can.)
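To make the compounding-error point in the first limitation concrete, here is a minimal Python sketch. It rests on a simplifying assumption that is not from this post: each token independently has the same small chance of leaving the “correct” path. The function name `p_stays_correct` and the error rates are illustrative only; real models do not have a single, constant per-token error rate.

```python
def p_stays_correct(per_token_error: float, length: int) -> float:
    """Chance that an output of `length` tokens never leaves the "correct" path.

    Assumes (hypothetically) that every token has the same, independent
    probability `per_token_error` of going wrong.
    """
    return (1.0 - per_token_error) ** length


if __name__ == "__main__":
    # Illustrative numbers only: a 1% per-token error rate.
    for n in (10, 100, 500):
        print(f"{n:>4} tokens: {p_stays_correct(0.01, n):.3f}")
```

Under this toy model, the chance of a fully correct output shrinks exponentially as the output gets longer, which is why longer generations deserve more scrutiny.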

These limitations required us to pause and reflect on the impact on the search experience before incorporating this new technology for our customers. As a result, we came up with an AI integration philosophy that is guided by these principles:

- AI should be used in closed, defined context relevant to search (don’t make a therapist inside the search engine, for example)

- AI should be used to enhance the search experience, not to create it or replace it (meaning AI is opt-in and on-demand, similar to how we use JavaScript in Kagi, where search still works perfectly fine when JS is disabled in the browser)

- AI should be used to the extent that it enhances our humanity, not diminish it (AI should be used to support users, not replace them)

While it’s important to use AI tools responsibly and not rely on them too heavily, the design of these tools can sometimes make that difficult.

Therefore, a well-designed tool should have features that allow for a seamless transfer of control back to the user when the tool fails, such as an autopilot system in aviation that can pass control back to the pilot when it fails or be overridden by the pilot at any time.

Additionally, an AI tool should be able to indicate when it has low confidence in its answers, especially when dealing with uncertain data, so that users can make informed decisions based on the tool’s limitations. Regrettably, current large language models (LLMs) lack a “ground truth” understanding of the world, making such evaluations unattainable. This remains a fundamental challenge with the present generation of LLM architecture, a problem that all contemporary generative AI systems share.

How good are AI “Answering Engines” really?

When implementing a feature of this nature, it is crucial to establish the level of accuracy that users can anticipate. This can be accomplished by constructing a test dataset of challenging and complex questions, the kind that typically require human investigation but can be answered with certainty using the web. These are exactly the tasks that AI answering engines aim to streamline for the user. To that end, we have developed a dataset of ‘hard’ questions, drawn from the most challenging ones we could source from the Natural Questions dataset, Twitter, and Reddit.

The questions included in the dataset range in difficulty, starting from easy and becoming progressively more challenging. We plan to release the dataset with the next update of the test results in 6 months. Some of the questions can be answered “from memory,” but many require access to the web (we wanted a good mix). Here are a few sample questions from the dataset:

- “Easy” questions like “Who is known as the father of Texas?” - 15 / 15 AI providers got this right (only four other questions were answered correctly by all AI providers).

- Trick questions like “During world cup 2022, Argentina lost to France by how many points?” - 8 / 15 AI providers were not fooled by this and got it right.

- Hard questions like “What is the name of Joe Biden’s wife’s mother?” - 5 / 15 AI providers got this right.

- Very hard questions like “Which of these compute the same thing: Fourier Transform on real functions, Fast Fourier Transform, Quantum Fourier Transform, Discrete Fourier Transform?” that only one provider got right (thanks to @noop_noob for suggesting this question on Twitter).

In addition to testing Kagi AI’s capabilities, we also sought to assess the performance of every other “answering engine” available for our testing purposes. These included Bing, Neeva, You.com, Perplexity.ai, ChatGPT 3.5 and 4, Bard, Google Assistant (mobile app), Lexii.ai, Friday.page, Komo.ai, Phind.com, Poe.com, and Brave Search. It is worth noting that all providers, except for ChatGPT, have access to the internet, which enhances their ability to provide accurate answers. As Google’s Bard is not yet officially available, we opted to test the Google Assistant mobile app, considered state-of-the-art in question-answering on the web just a few months ago. Update 3 / 21: We now include Bard results.

To conduct the test, we asked each engine the same set of 56 questions and recorded whether or not the answer was provided in the response. The answered % rate reflects the number of questions correctly answered, expressed as a percentage (e.g., 75% means that 42 out of 56 questions were answered correctly).

And now the results.

| Answering engine | Questions Answered | Answered % |
|---|---|---|
| Human with a search engine [1] | 56 | 100.0% |
| Phind | 44 | 78.6% |
| Kagi | 43 | 76.8% |
| You | 42 | 75.0% |
| Google Bard | 41 | 73.2% |
| Bing Chat | 41 | 73.2% |
| ChatGPT 4 | 41 | 73.2% |
| Perplexity | 40 | 71.4% |
| Lexii | 38 | 67.9% |
| Komo | 37 | 66.1% |
| Poe (Sage) | 37 | 66.1% |
| Friday.page | 37 | 66.1% |
| ChatGPT 3.5 | 36 | 64.3% |
| Neeva | 31 | 55.4% |
| Google Assistant (mobile app) | 27 | 48.2% |
| Brave Search | 19 | 33.9% |

[1] Test was not timed and this particular human wanted to make sure they were right

Disclaimer: Take these results with a grain of salt, as we’ve seen a lot of diversity in the style of answers and mixing of correct answers and wrong context, which made keeping the objective score challenging. The relative strength should generally hold true on any diverse set of questions.
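For readers who want to check the arithmetic, the Answered % column can be recomputed from the raw counts. The Python sketch below is illustrative only: the counts are copied from the results table, while the names `answered_pct`, `ANSWERED`, and `below_baseline` are assumptions made for this example and are not part of the original evaluation.

```python
TOTAL_QUESTIONS = 56  # every engine was asked the same 56 questions

# Correct-answer counts copied from the results table.
ANSWERED = {
    "Phind": 44, "Kagi": 43, "You": 42, "Google Bard": 41, "Bing Chat": 41,
    "ChatGPT 4": 41, "Perplexity": 40, "Lexii": 38, "Komo": 37,
    "Poe (Sage)": 37, "Friday.page": 37, "ChatGPT 3.5": 36, "Neeva": 31,
    "Google Assistant (mobile app)": 27, "Brave Search": 19,
}


def answered_pct(correct: int, total: int = TOTAL_QUESTIONS) -> float:
    """Share of questions answered correctly, as a percentage with one decimal."""
    return round(100 * correct / total, 1)


def below_baseline(baseline: str) -> list[str]:
    """Engines that answered fewer questions than the given baseline engine."""
    return [name for name, n in ANSWERED.items() if n < ANSWERED[baseline]]


if __name__ == "__main__":
    for name, n in ANSWERED.items():
        print(f"{name:<30} {n:>2}  {answered_pct(n):.1f}%")
```

Calling `below_baseline("ChatGPT 3.5")` lists the engines that scored lower than the model without internet access.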

Our findings revealed that the top-performing AI engines exhibited an accuracy rate of approximately 75% on these questions, which means that users can rely on state-of-the-art AI to answer approximately three out of four questions. When unable to answer, these engines either did not provide an answer or provided a convincing but inaccurate answer.

ChatGPT 4 has shown improvement over ChatGPT 3.5 and was close to the best answering engines, despite having no internet access. This suggests that access to the web gave the others only a marginal advantage and that answering engines still have a lot of room to improve.

On the other hand, three providers (Neeva, Google Assistant, and Brave Search), all of which have internet access, performed worse than ChatGPT 3.5 without internet access.

Additionally, it is noteworthy that the previous state-of-the-art AI, Google Assistant, was outperformed by almost every competitor, many of which are relatively small companies. This speaks to the remarkable democratization of the ability to answer questions on the web, enabled by the recent advancements in AI.

The main limitation of the top answering engines at this time seems to be the quality of the underlying ‘zero-shot’ search results available for the verbatim queries. When humans perform the same task, they will search multiple times, adjusting the query if needed, until they are satisfied with the answer. None of the tested answering engines implements such an approach yet. In addition, the search results returned could be optimized for use in answering engines, which is currently not the case.

In general, we are cautiously optimistic about Kagi’s present abilities, but we also see a lot of opportunities to improve. We plan to update the test results and release the questions in 6 months as we compare the progress made by the field.

What are we launching today?

We are launching three new search features today: Summarize Results, Summarize Page, and Ask Questions about Document.

All three features are activated on demand, as per our AI integration philosophy, and do not incur any cost to the user unless used. Usage of these features is converted into search usage and is discussed in more detail below.

Summarize Results

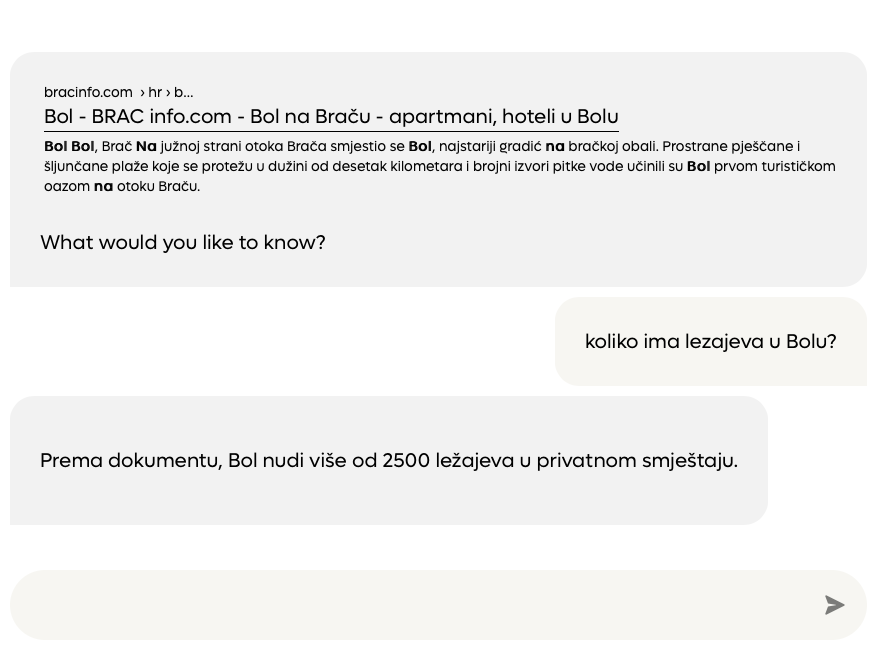

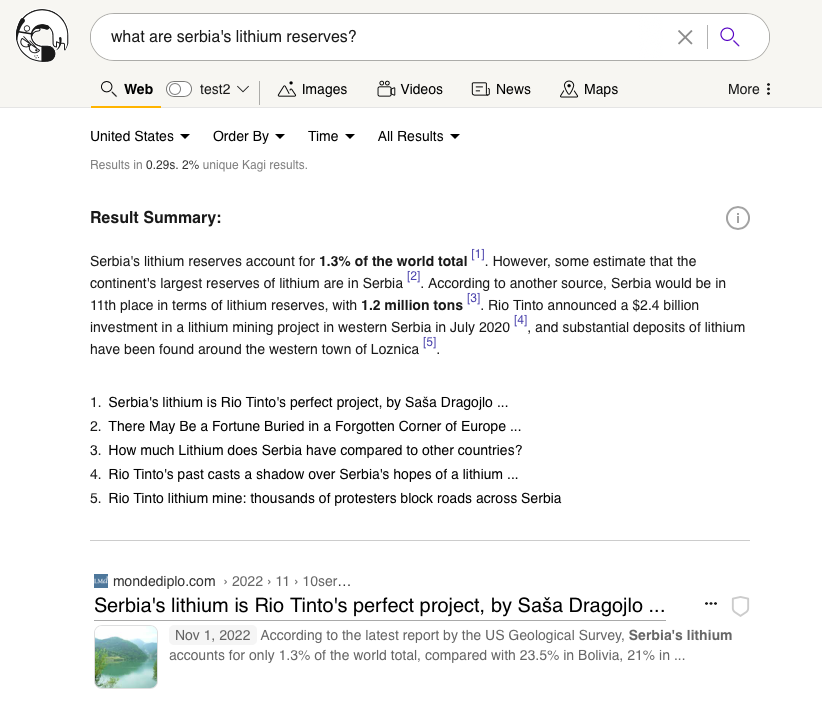

We’re introducing a new feature called “Summarize Results.” It helps users quickly grasp the main points of available results in the context of the query, without visiting each result, by providing a concise summary with citations.

It helps transform information in a search page like this:

To a much more ready-to-digest form:

Summarize Results is available today for all Kagi search users.

Summarize Page

This is the first feature currently unique to Kagi (among other search engines) and allows the user to summarize any page appearing in the web results.

In the background, this uses our Universal Summarizer technology and can summarize almost any web content in any format and length. The feature can be found in the … menu of any search result.

When invoked, it will produce a page summary, if possible.

Generating the summary takes about 5-10 seconds, and we are still looking for ways to make it faster.

Summarize Page is available today for all Kagi search users.

Ask Questions about Document

Our second unique feature is called Ask Questions about Document. This allows the user to enter interactive chat mode with any document in the search results and ask questions about it.

If you wonder if this works in other languages, the answer is yes!

Ask Questions about Document is available today for all Kagi search users.

AI Usage Pricing

Using AI tools will be treated as search usage, according to your Kagi plan.

In general, we count 1,000 tokens processed by AI as one search. In practice, this means that:

- Summarize Results will typically use 700-800 tokens, or less than 1 search. Cached summaries will be free.

- Ask Questions about Document will typically use 500-1000 tokens per question (depending on document size)

- Summarize Page uses twice the tokens. A typical blog post or an article will have 500 - 2500 tokens resulting in 1-5 searches when summarized. We set a cap on maximum charged tokens to 10,000 even if the document has more than that. For example, if you are summarizing an entire book with 100,000 words, it will cost you ~10 searches. Furthermore, if the summary was cached (somebody requested it previously), it will be free.
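The pricing rules above can be sketched as a small Python calculator. This is an illustration under stated assumptions, not the billing implementation: the function names are hypothetical, the post does not say how fractional searches are rounded (so the sketch returns fractions), and the 10,000-token cap is assumed to apply to charged tokens after doubling, which matches the "~10 searches" book example.

```python
TOKENS_PER_SEARCH = 1_000    # from the post: 1,000 tokens count as one search
SUMMARIZE_PAGE_FACTOR = 2    # from the post: Summarize Page "uses twice the tokens"
MAX_CHARGED_TOKENS = 10_000  # from the post: cap on charged tokens per summary


def searches_for_tokens(tokens: int) -> float:
    """Convert processed tokens into (fractional) searches. Rounding is not modeled."""
    return tokens / TOKENS_PER_SEARCH


def summarize_page_cost(document_tokens: int, cached: bool = False) -> float:
    """Estimated search cost of summarizing a page of `document_tokens` tokens."""
    if cached:
        return 0.0  # cached summaries are free
    # Assumption: the cap limits charged tokens, i.e. it applies after doubling.
    charged = min(document_tokens * SUMMARIZE_PAGE_FACTOR, MAX_CHARGED_TOKENS)
    return searches_for_tokens(charged)


if __name__ == "__main__":
    print(searches_for_tokens(750))        # a typical Summarize Results call
    print(summarize_page_cost(2_500))      # a long article
    print(summarize_page_cost(130_000))    # a whole book hits the cap
```

For example, a 500-token blog post comes out at 1 search and a 2,500-token article at 5, matching the 1-5 range quoted above.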

Trial accounts have a limit of 10 monthly interactions with AI. The Legacy Professional plan has a limit of 50 monthly interactions with AI. The Ultimate plan has a fair use policy of 300 monthly interactions with AI.

Next up: Universal Summarizer

For those of you waiting for Universal Summarizer, we plan to officially launch it next week on Wednesday, March 22nd, as a standalone web app for all Kagi users and as an API.

After that: Family plans

A week after that, on March 29, we will be launching our Family plans.

And after that we are going back to the backlog of over 200 ideas we have to make search better! Thanks for using kagifeedback.org to post your feature suggestions and bug reports.

Frequently Asked Questions

Q. Is the comparison table based on the just-launched Kagi AI capabilities?

A. Yes. Kagi results are effectively the output of the just-launched “Summarize Results” feature.

Q. If it is not possible to say whether AI output is correct or not, how is it useful?

A. This output is best used to enhance the search experience and supplement one’s knowledge, rather than replacing it entirely. Think of it as a research intern who can digest a lot of information for you, but whose work you may want to double-check on the critical parts.

Q. Can you give an overview of how the Universal Summarizer works? How do you get around the context length limitation?

A. We use a completely different approach for summarization that does not have any token limit.

Q. Did you develop all your AI tools from scratch, or were they based on something like davinci or some open-source LLM?

A. It is a mix of models. We closely follow state-of-the-art development in question answering and summarization and adjust our usage of models as needed (both in-house and external). Models come and go. It is the goal that matters, which is to have the best experience for the user in the context they are using it.

Q. Are the new AI tools limited to English only? And if so, are there any plans to expand them to other languages?

A. No, they are not. They should work equally well in most languages.

Q. I have a great idea for an AI feature in search!

A. We are all ears. Please let us know through kagifeedback.org.

Q. Is Kagi still bootstrapped?

A. Yes. We plan to do our “Family, Friends and Users” investment round sometime in the April-May time frame. You can express interest by signing up here.