Kagi Assistant is now available to all users!

At Kagi, our mission is simple: to humanise the web. We want to deliver a search experience that prioritises human needs, allowing you to explore the web effectively, privately, and without manipulation. We evaluate new technologies not for their acclaim but for their true potential to support our mission.

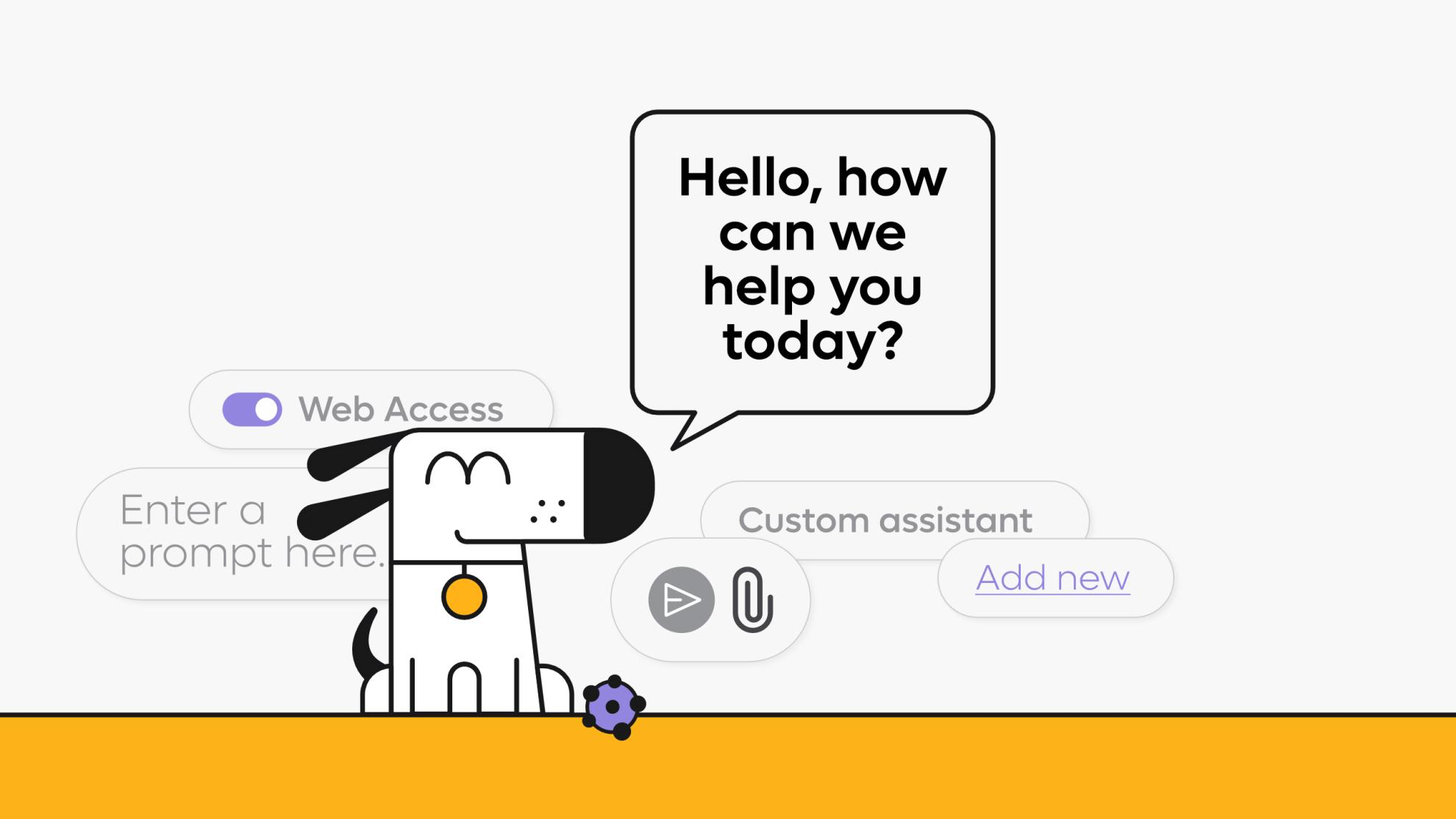

Since its launch, Kagi Assistant has been a favourite for many users, as it gives access to the world's top large language models, grounded in Kagi Search, all in one place and in one beautiful user interface - and all that for a +$15/mo upgrade from our Professional plan, which provides unlimited Kagi Search.

Today, we’re excited to announce that Kagi Assistant, previously exclusive to Ultimate subscribers, is now available to all users across all plans. It comes as added value for every Kagi customer, without a price increase.

An important note: We are enabling the Assistant for all users in phases, based on regions, starting with the USA today. The full rollout for ‘Assistant for All’ is scheduled to be completed by Sunday, 23:59 UTC.

Our approach to integrating AI is shaped by these realities and guided by three principles:

- AI serves a defined, search-relevant context: Kagi Assistant is a research aid.

- AI enhances, it doesn’t replace: Kagi Search remains our core offering, functioning independently. Kagi Assistant is an optional tool you can use as needed.

- AI should enhance humanity, not diminish it: Our goal is to improve your research process by helping you synthesise information or explore topics grounded in Kagi Search results, not to replace your critical thinking.

Kagi Assistant embodies these principles, working within the context of Kagi’s search results to provide a new way to interact with information. It’s built to make research easier while respecting your privacy and AI’s limits.

By making Kagi Assistant available to everyone, we’re giving all users the choice to explore this capability as part of their Kagi toolkit - at no additional cost to their subscription. Use it when and how it suits your workflow, knowing it’s built with privacy, responsibility, and human-centric values at its core.

Let’s talk about the specifics!

AI grounded in Kagi Search, guided by you

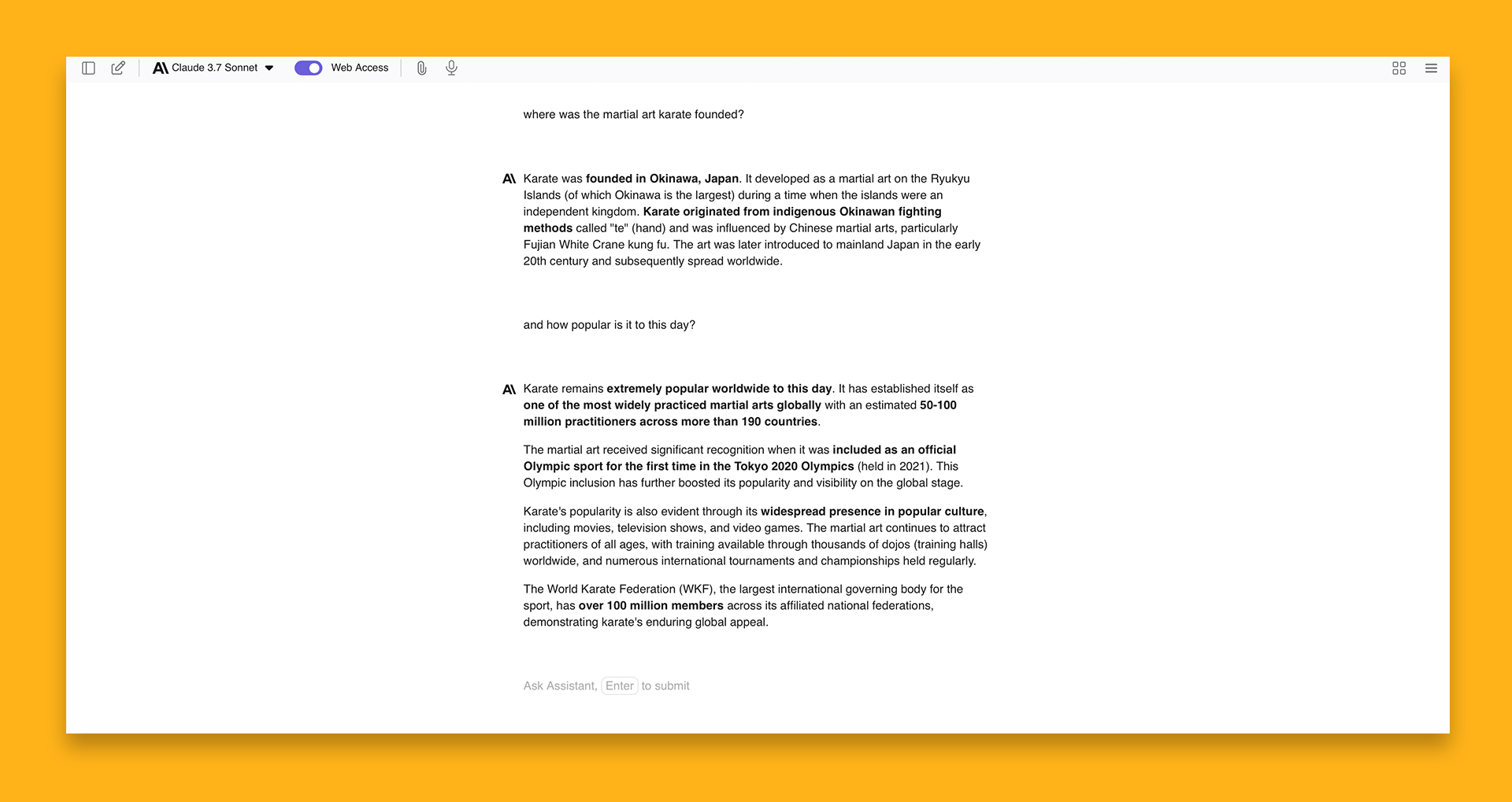

When you enable web access, the Assistant has access to Kagi Search results. It also respects your personalised domain rankings and lets you use Lenses to narrow the search scope.

Or, if you’d prefer to talk directly with the model, you can turn off web access. The Assistant also supports file uploads, allowing you to provide additional context or information for your queries.

Custom assistants tailored to your needs

Create specialized assistants with unique instructions, defining their purpose, context, and web access preferences. Need help with coding, grammar reviews, or diagnosing an issue with your classic VW Bus? Build an assistant for it.

Pro-tip: assign a custom bang (!name) for instant access via your browser’s search bar.
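As a rough illustration of what a bang-prefixed query might look like once it leaves the search bar, here is a minimal Python sketch. It assumes the browser sends the typed text to a search endpoint of the form `https://kagi.com/search?q=...`, which this post does not state; the bang name `vwbus` and the helper `build_bang_url` are hypothetical.

```python
from urllib.parse import urlencode

# Assumed search endpoint; not taken from this post.
KAGI_SEARCH_URL = "https://kagi.com/search"


def build_bang_url(bang: str, query: str) -> str:
    """Return a search URL whose query starts with a custom bang (!name)."""
    # The bang comes first, followed by the question for the custom assistant.
    full_query = f"!{bang} {query}"
    # urlencode percent-encodes the "!" and turns spaces into "+".
    return KAGI_SEARCH_URL + "?" + urlencode({"q": full_query})


if __name__ == "__main__":
    # "vwbus" is a hypothetical bang assigned to a custom assistant.
    print(build_bang_url("vwbus", "engine stalls when warm"))
```

In everyday use you would simply type `!vwbus engine stalls when warm` into the search bar; the sketch only shows how such a query could be encoded.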

Refine and redirect with editing

Conversations don’t always go as planned. If a response misses the mark, Kagi Assistant lets you edit prompts, switch models, or adjust settings mid-thread. This ensures you stay in control and can redirect the conversation without starting over.

Privacy as a foundation

Your privacy is our priority. Assistant threads are private by default and expire automatically based on your settings, and your interaction data is not used to train AI models. This applies to both Kagi and third-party providers, under strict contractual terms.

Please see Kagi LLMs privacy for additional information.

A note on our fair-use policy

Providing powerful AI tools requires significant resources. To ensure sustainability, we’re starting to enforce our fair-use policy.

In short, the policy lets you use AI models up to your plan’s value. For example, a $25 monthly plan allows up to $25 worth of raw token cost across all models (there is a 20% built-in margin that we reserve for providing searches, development and infrastructure for the service). Based on our token usage statistics, 95% of users should never hit this limit.

While most users won’t be affected, those who exceed this generous threshold will be able to renew their subscription cycle instantly. Soon, we’ll introduce credit top-ups for added flexibility. This approach ensures a fair, user-funded model while maintaining quality service, and it is a simpler way to control usage than the arbitrary usage limits found in other services.
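To make the arithmetic concrete, here is a minimal Python sketch of how a plan's allowance could translate into tokens. Everything specific in it is an assumption for illustration: the model names and per-million-token prices are made up, input and output tokens are priced at one blended rate, and the full plan price is treated as the allowance (as in the $25 example above), since the post does not spell out how the 20% margin enters the calculation.

```python
# Hypothetical blended prices in USD per million tokens; NOT real provider rates.
PRICE_PER_MILLION_USD = {
    "small-model": 0.50,
    "large-model": 10.00,
}


def tokens_within_budget(plan_price_usd: float, model: str) -> int:
    """How many tokens of one model fit into the plan's monthly allowance."""
    price = PRICE_PER_MILLION_USD[model]
    return int(plan_price_usd / price * 1_000_000)


def spent_usd(usage_tokens: dict[str, int]) -> float:
    """Raw token cost of the tokens used so far, summed across models."""
    return sum(
        tokens / 1_000_000 * PRICE_PER_MILLION_USD[model]
        for model, tokens in usage_tokens.items()
    )


def remaining_usd(plan_price_usd: float, usage_tokens: dict[str, int]) -> float:
    """Allowance left in the current cycle (never below zero)."""
    return max(0.0, plan_price_usd - spent_usd(usage_tokens))


if __name__ == "__main__":
    plan = 25.0  # the $25 plan from the example above
    for model in PRICE_PER_MILLION_USD:
        print(model, tokens_within_budget(plan, model))
    usage = {"small-model": 10_000_000, "large-model": 1_000_000}
    print("remaining:", remaining_usd(plan, usage))
```

Under these made-up prices, the same allowance stretches twenty times further on the cheaper model, which is the practical effect of charging by raw token cost rather than by message count.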

Your favourite models are waiting for you

Choose from a range of leading LLMs from OpenAI, Anthropic, Google, Mistral, and more. You can switch models mid-thread and explore their performance through our regularly updated open-source LLM benchmark. The choice of models on non-Ultimate plans is limited compared to the full offering on the Ultimate plan; see the table below.

Access to your favourite LLMs lets Kagi Assistant adapt to your requirements and query customisations, so we offer an array of models for you to choose from.

| Model Name | Plan |
|---|---|
| GPT 4o mini | All |
| GPT 4.1 mini | All |
| GPT 4.1 nano | All |
| Gemini 2.5 Flash | All |
| Mistral Pixtral | All |
| Llama 3.3 70B | All |
| Llama 4 Scout | All |
| Llama 4 Maverick | All |
| Nova Lite | All |
| DeepSeek Chat V3 | All |
| GPT 4o | Ultimate |
| o3 mini | Ultimate |
| o4 mini | Ultimate |
| GPT 4.1 | Ultimate |
| ChatGPT 4o | Ultimate |
| Grok 3 | Ultimate |
| Grok 3 Mini | Ultimate |
| Claude 3.5 Haiku | Ultimate |
| Claude 3.7 Sonnet | Ultimate |
| Claude 3.7 Sonnet with extended thinking | Ultimate |
| Claude 3 Opus | Ultimate |
| Gemini 1.5 Pro | Ultimate |
| Gemini 2.5 Pro Preview | Ultimate |
| Mistral Large | Ultimate |
| Llama 3.1 405B | Ultimate |
| Qwen QwQ 32B | Ultimate |
| Nova Pro | Ultimate |
| DeepSeek R1 | Ultimate |
| DeepSeek R1 Distill Llama 70B | Ultimate |

Explore further

This is just the beginning for Kagi Assistant. Explore more in our documentation.

Happy fetching,

Team Kagi.

F.A.Q.

Q: Does using a less costly model (like DeepSeek) use fewer credits than using a larger one?

A: Yes. The fair use policy calculates usage based on the actual cost charged by the model provider. Therefore, using smaller, less expensive models will allow for significantly more token usage compared to larger models.

Q: Does Kagi receive discounted rates from AI model providers?

A: No, Kagi does not receive discounts. However, we utilize smart caching techniques for the models to reduce operational costs, and these savings are passed on to the user.

Q: Why did Kagi start enforcing the fair use policy?

A: The policy was enforced due to excessive use. For instance, the top 10 users accounted for approximately 14% of the total costs, with some individuals consistently using up to 50 million tokens per week on the most advanced models. Our profit margins are already quite narrow. 95% of users should never hit any usage limits.

Q: What is the specific usage limit?

A: The limit corresponds directly to the monetary value of your Kagi plan, converted into an equivalent token amount. For example, a $25 plan provides $25 worth of token usage. This calculation includes a 20% margin for Kagi to cover search provision, development, and infrastructure costs. Savings achieved through prompt caching and other optimizations are passed on to you.

Q: Where can I view my token usage?

A: Currently, you can monitor your token usage on the Consumption page: https://kagi.com/settings?p=consumption. We plan to display cost and interaction details more prominently soon, potentially on the billing page or directly within the Assistant interface.

Q: I cannot access the Assistant!

A: We are doing a staged rollout beginning with the USA; the full rollout is scheduled to be completed by Sunday, 23:59 UTC. This will include other regions and even the trial plan.