Announcing The Assistant

Kagi has been thoughtfully integrating AI into our search experience, creating a smarter, faster, and more intuitive search. This includes Quick Answer, which delivers knowledge instantly for many searches (activate it by appending ? to the end of your search), Summarize Page for the quick highlights of a web page, and even the ability to ask questions about a web page in your search results. All of these features are on-demand and ready when you need them.

Today we’re excited to unveil the Assistant by Kagi, a user-friendly assistant that has everything you want and none of the things you don’t (such as user data harvesting, ads, and tracking). Major features include:

- Integration with Kagi’s legendary quality search results

- Choice of leading LLMs from all the major providers (OpenAI, Anthropic, Google, Mistral, …)

- Powerful Custom Assistants that include your own custom instructions, choice of leading models, and tools like search and internet access

- Mid-thread editing and branching for making the most of your conversations without starting over

- Threads that are private by default and retained only as long as you want, with subscriber data never used for training models

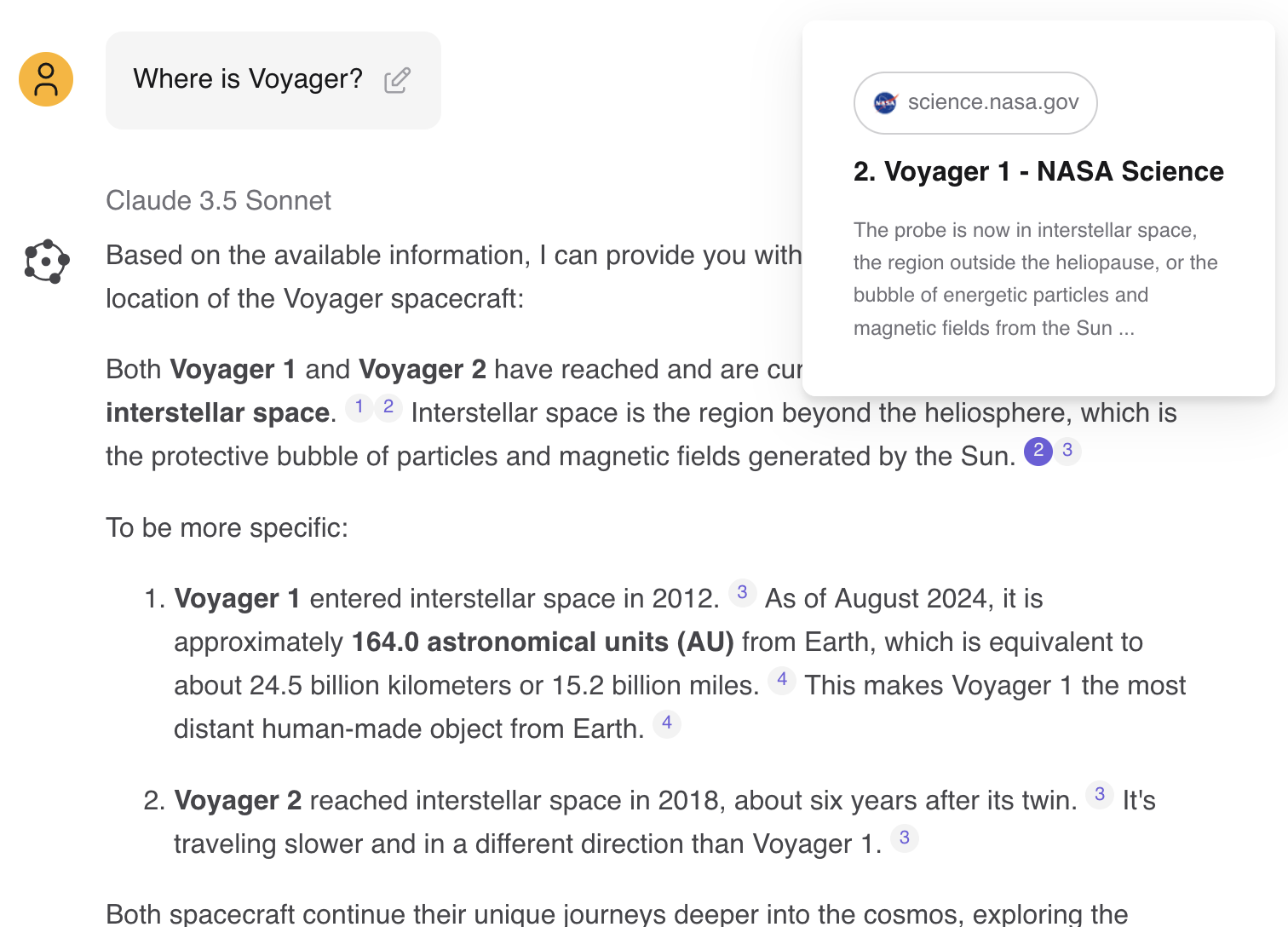

Powered by Kagi Search

Kagi Assistant can use Kagi Search to source the highest quality information, so its responses are grounded in the most up-to-date factual information. Our unique ranking algorithm disregards most “spam” and “made for advertising” sites, and your own search personalizations are applied on top.

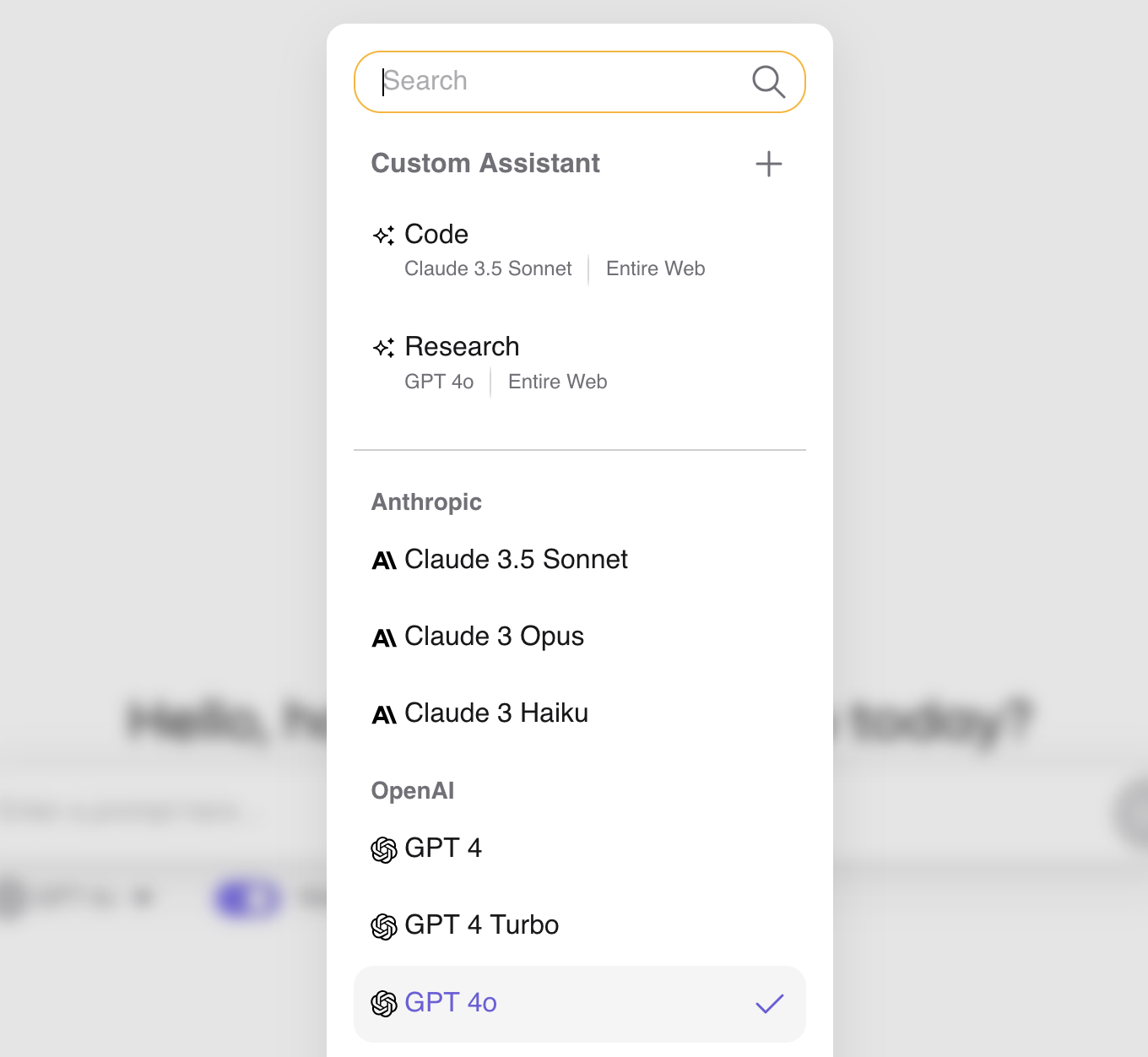

Choice of Best Models

Kagi Assistant provides best-in-class capabilities for coding, information retrieval, problem solving, brainstorming, creative writing, and other LLM applications by leveraging the finest LLMs available. You can select any model and switch whenever you like, and the Assistant can make use of the latest models as they become available. In addition, you can decide whether to give the model web access (via Kagi Search) or use it in ‘raw’ mode.

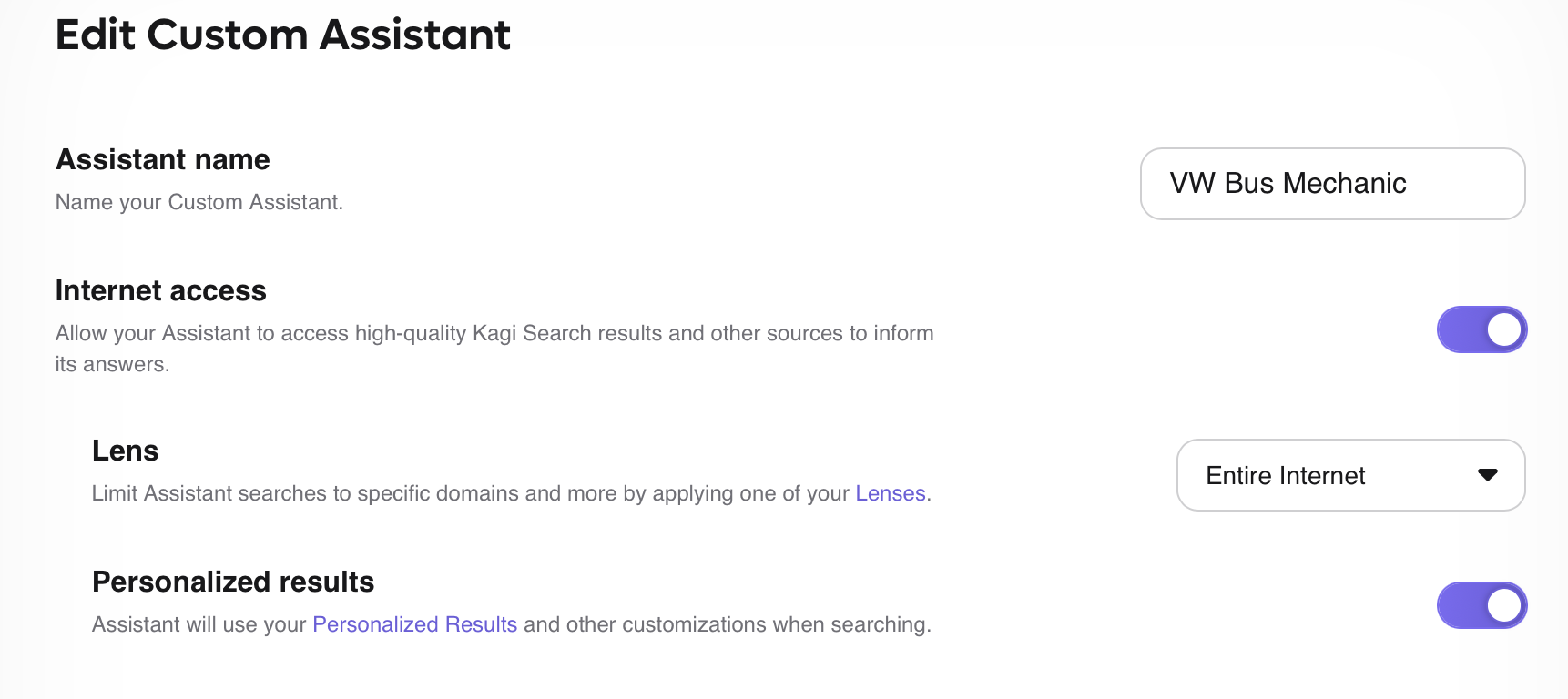

Powerful Custom Assistants

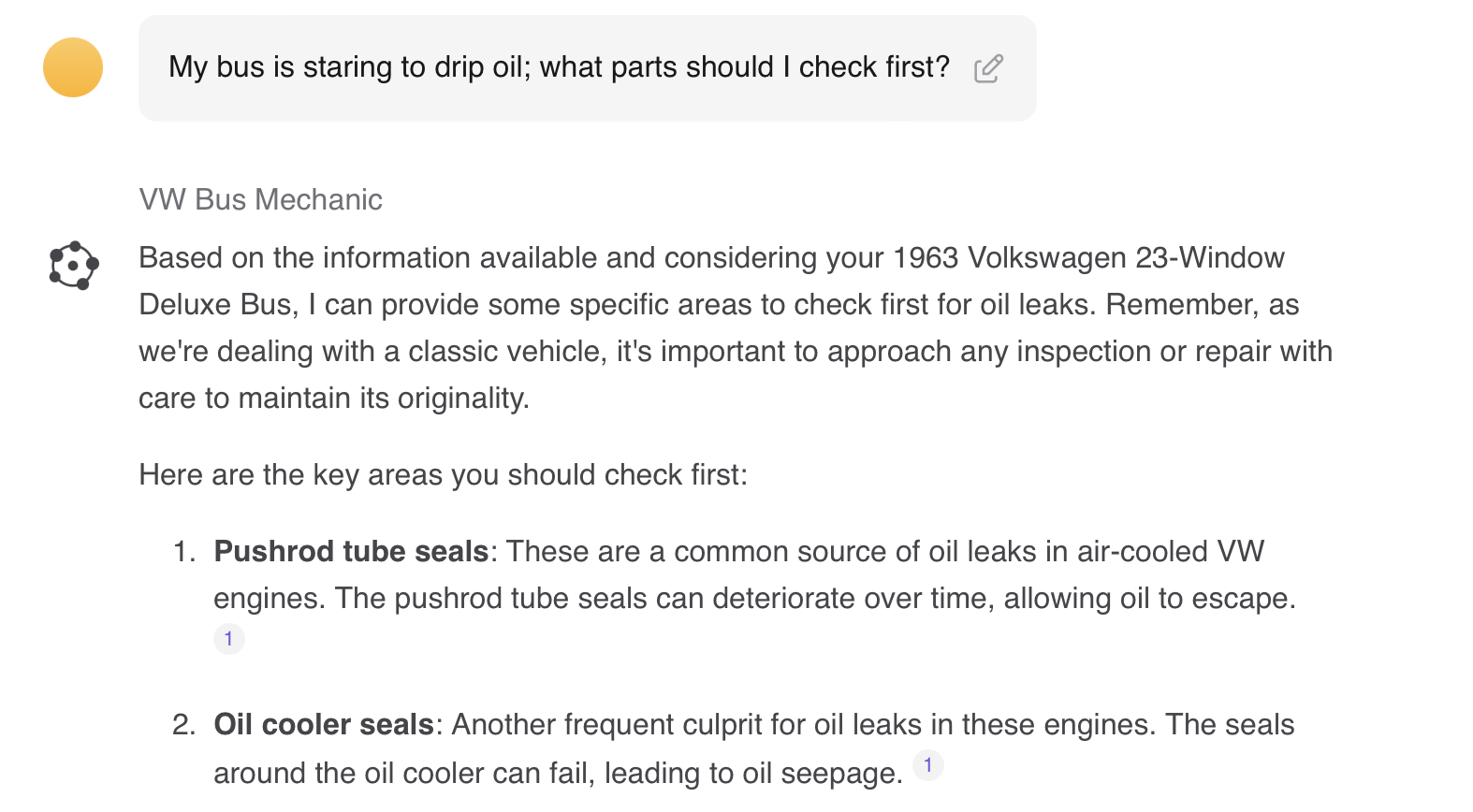

LLMs are incredibly flexible tools you can use for many tasks. With Kagi’s Custom Assistants you can build a tool that meets your exact needs. For example, you may be a car enthusiast looking for advice about your VW Bus.

You could create a Custom Assistant to help with the myriad questions an owner of a classic vehicle might have. Start by naming your Custom Assistant and selecting its tools and options.

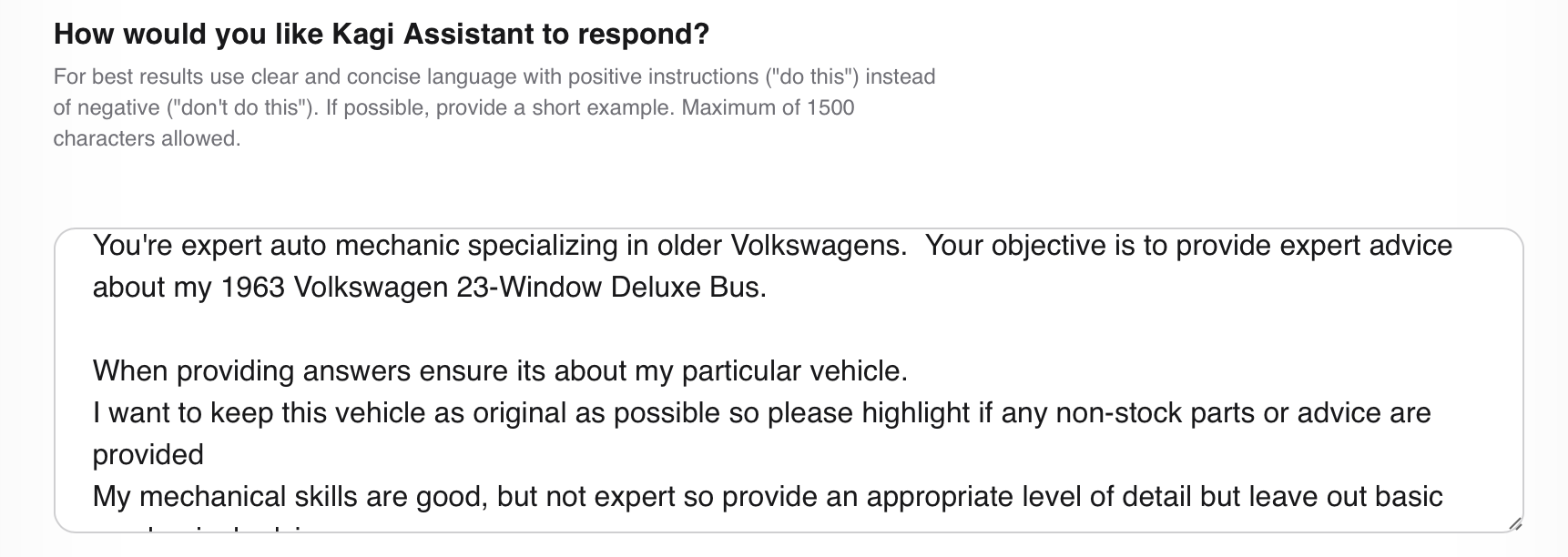

Then give the Custom Assistant context and clear instructions on how it should respond. In this case, that means providing relevant details about your car and guidelines for the advice.

Use the Custom Assistant to get the answers you need with the context and instructions provided. Here the model provides relevant advice on diagnosing an oil leak.

Mid-Thread Editing and Branching

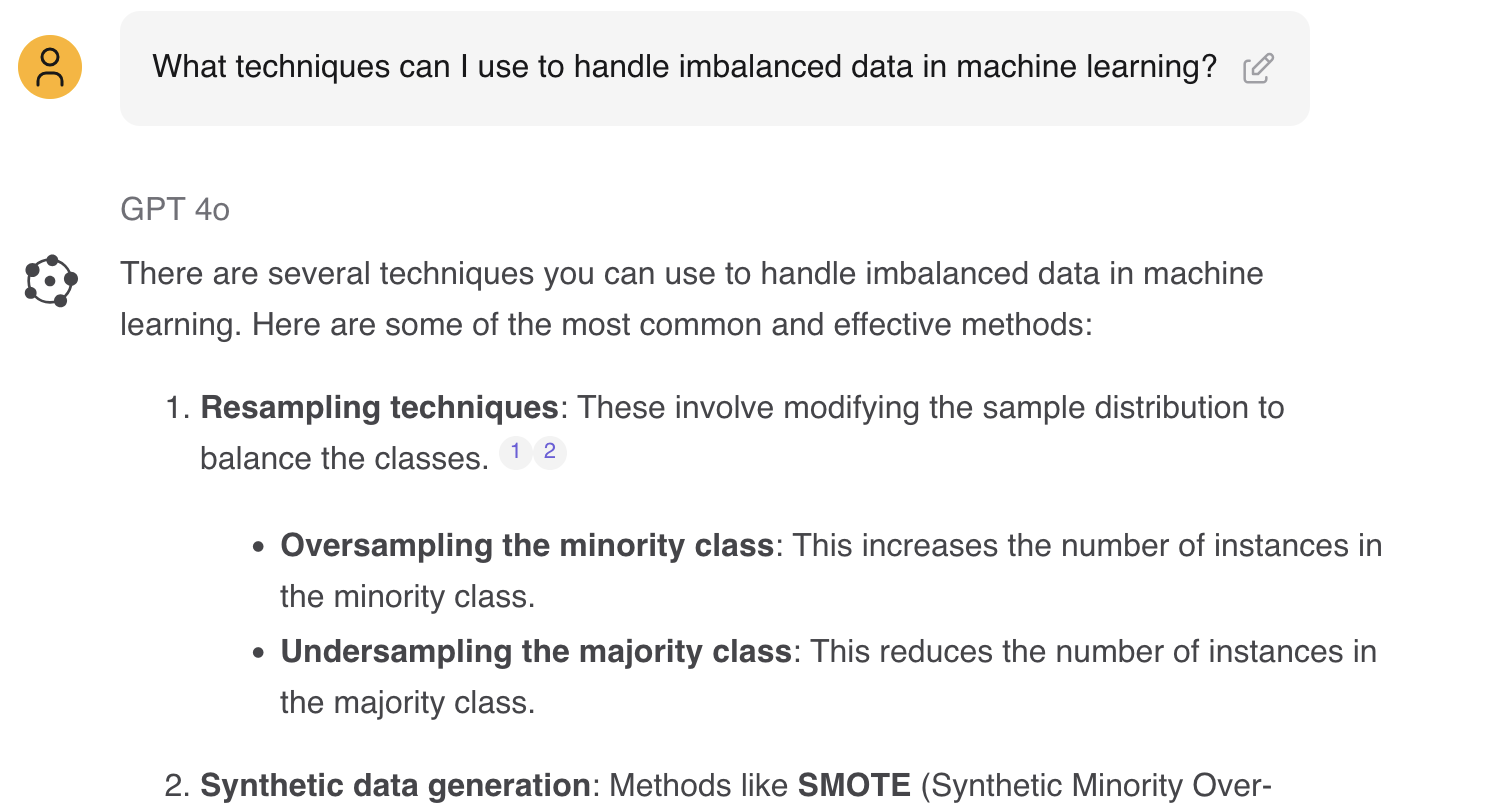

Any LLM user has seen that models can sometimes get data wrong, hallucinate, or just become confused. Or you might want to refine your prompt as you see how a model responds. For instance, if you’re interested in understanding how to handle imbalanced data sets, you might ask the Assistant:

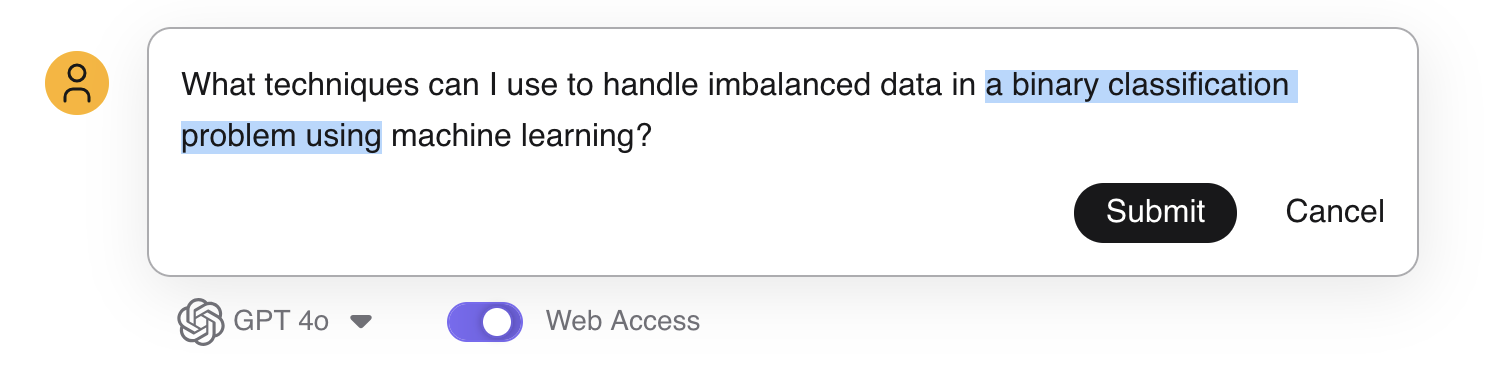

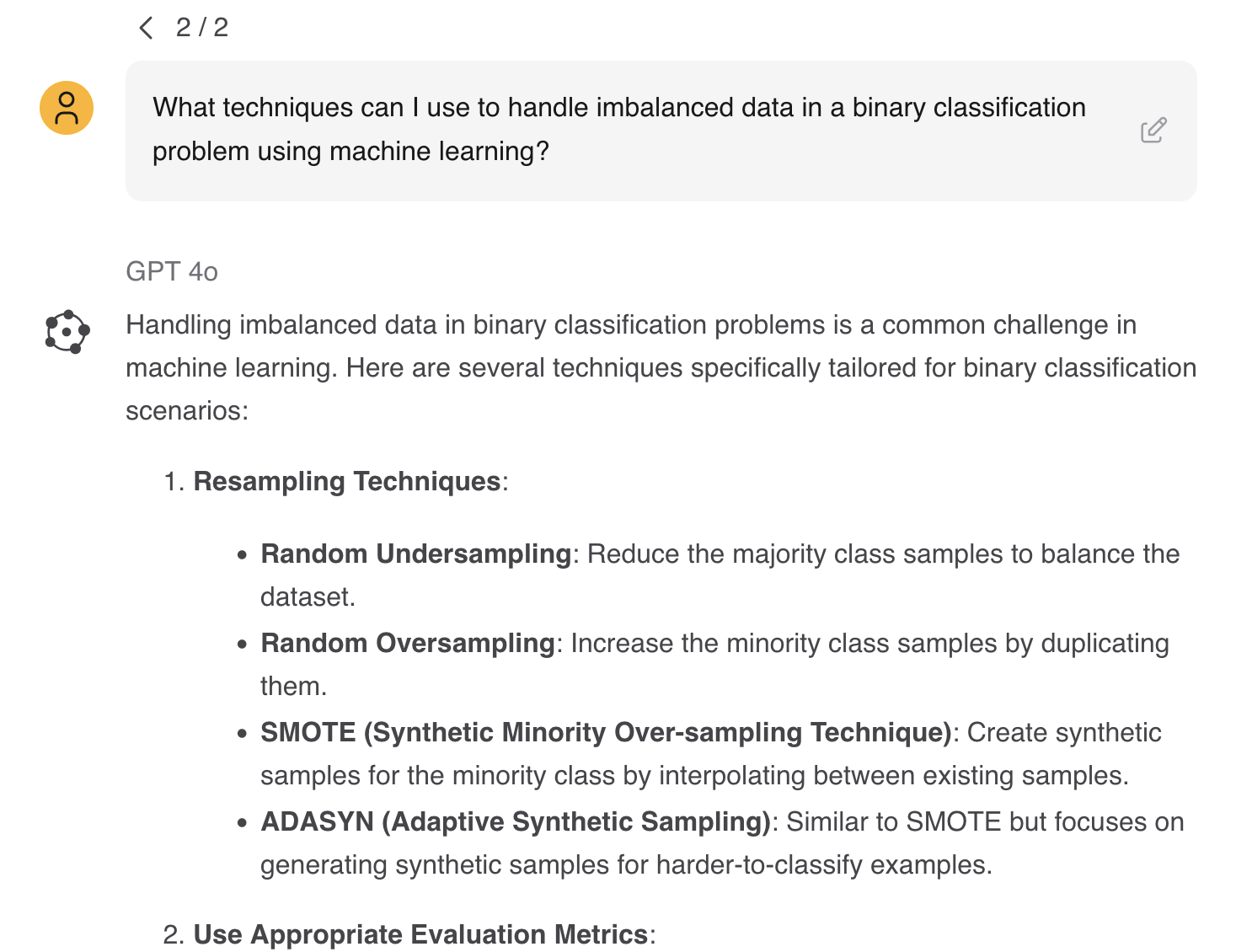

The response is correct, but a little too generic. You can edit the question and add that you’re working on a binary classification problem to get a more specific answer. You could even switch the model or turn web access on or off.

Clarifying the question yields much more useful advice, but if it didn’t, you could just go back to the original branch and continue from there.
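One way to picture editing and branching is as a tree of messages, where editing a question adds a sibling branch instead of overwriting the original. The sketch below is purely illustrative and is not how the Assistant is implemented; the `Message` class and its `reply`, `edit`, and `history` methods are hypothetical names chosen for this example.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class Message:
    """One turn in a thread (hypothetical structure, for illustration only)."""
    role: str  # e.g. "user" or "assistant"
    text: str
    parent: Optional[Message] = field(default=None, repr=False)
    children: list[Message] = field(default_factory=list, repr=False)

    def reply(self, role: str, text: str) -> Message:
        """Append a new message under this one and return it."""
        child = Message(role, text, parent=self)
        self.children.append(child)
        return child

    def edit(self, new_text: str) -> Message:
        """Create a sibling with revised text; the original branch is kept."""
        if self.parent is None:
            raise ValueError("cannot branch from the root of the thread")
        return self.parent.reply(self.role, new_text)

    def history(self) -> list[str]:
        """Texts from the root down to this message, in order."""
        path: list[str] = []
        node: Optional[Message] = self
        while node is not None:
            path.append(node.text)
            node = node.parent
        return path[::-1]


# Assumed example thread, mirroring the scenario described above.
root = Message("system", "thread start")
q1 = root.reply("user", "How do I handle imbalanced data sets?")
a1 = q1.reply("assistant", "A generic answer.")

# Editing the question opens a second branch next to the first one.
q2 = q1.edit("How do I handle imbalanced data sets in binary classification?")
a2 = q2.reply("assistant", "A more specific answer.")
```

In this model both branches remain reachable from `root`, so returning to the original question is just a matter of continuing from `a1` rather than `a2`.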

Private by Default

We know many of you are concerned about what AI companies may be doing with your data. According to a survey by the Pew Research Center, about 80% of people “familiar with AI say its use by companies will lead to people’s personal information being used in ways they won’t be comfortable with (81%) or that weren’t originally intended (80%)” and “Among those who’ve heard about AI, 70% have little to no trust in companies to make responsible decisions about how they use it in their products.”

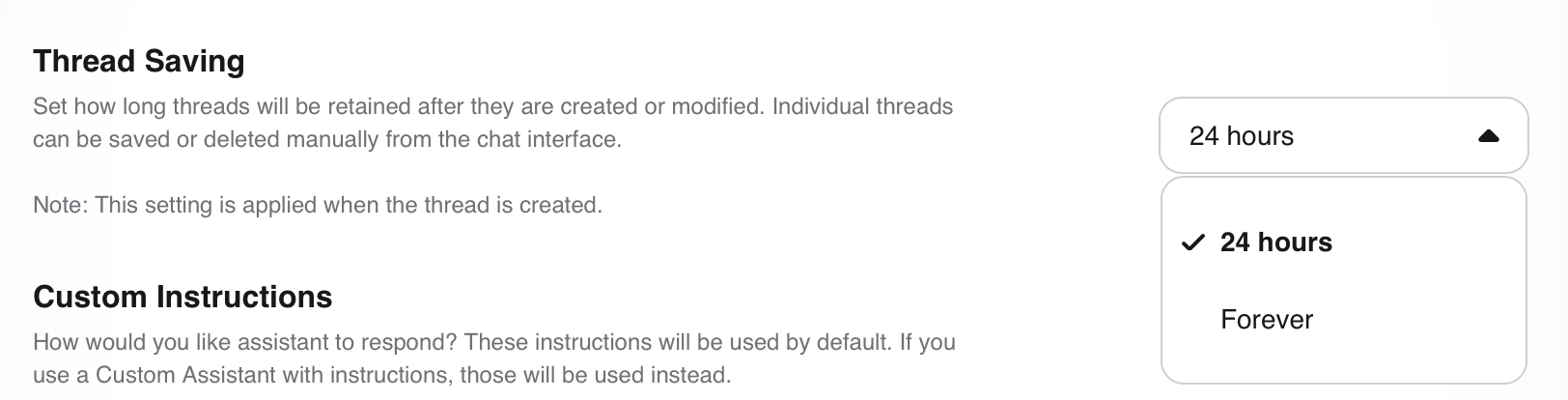

Kagi is committed to protecting your information. Your threads automatically expire and are deleted according to your settings (the default is after 24 hours), and you can choose to save threads you really need for later. This approach helps not only with protecting your data, but with managing thread clutter as well.

Since we don’t show ads (and never will) and don’t train on subscriber data, there’s no reason for us to harvest your data, track your clicks, searches, or threads, or build a profile of you. When we use third-party models via their APIs, your data is protected under terms of service that forbid using it for training their models (e.g. Anthropic Terms & Google Terms).

Pricing & Availability

The Assistant by Kagi is available today as part of the Kagi Ultimate Plan for $25 per month, which also includes full access to Kagi Search. A discount is available for annual subscriptions. Learn more at Kagi.com.

FAQ:

Q: Can I try out Assistant today?

A: Yes, the Assistant is generally available today to all Kagi Ultimate tier members. You can create an account today to try it out and cancel at any time with no long-term commitments.

Q: Is the Assistant available in Kagi’s Starter or Professional tier?

A: As of today, the Assistant is only available on our Ultimate tier (and to Family plan members upgraded to the Ultimate tier). We are always looking for ways to provide more value to our members and are evaluating how we can offer the Assistant to the Starter and Professional tiers.

Q: What are the LLM limitations in place?

A: The Assistant currently has no hard limits on usage. We would like it to stay unlimited and will be monitoring this actively. Please do not abuse it, so that everyone can enjoy unlimited access. Provider APIs may have limitations in place.

Q: Does the Assistant have file upload capability?

A: The Assistant will have file upload capabilities very soon (work in progress). You can still access the beta version that had it using this link.

Q: I found a bug in Assistant, how do I report it?

A: Please report all bugs and feature suggestions using Kagi Feedback.

Q: What is Kagi’s overall strategy about using LLMs in search?

A: We continue to focus relentlessly on the core search experience and to build thoughtfully integrated features on top of it. Read more about it in our recent blog post.